In a recent article, we wrote about numeric intuition and about how we refer to large and very large numbers, whether or not we have mathematical or scientific training. Here we propose a more ambitious theme, one that, from a certain point of view, lies at the other end of the spectrum. The thesis we advance from the outset is that everything is number, or at least numeric. In everyday life, it takes very little to reach the quantitative, and hence the numerical. We wake up in the morning at a time shown by numbers on a screen or dial, and we organize our schedule around events that are again defined by a time (that is, by numbers) and a place. We prepare breakfast and every other meal using numerical quantities of ingredients. When we need to get somewhere, we look up the distance to travel and the time the trip requires, both expressed in numbers. Not to mention the entire economic system: from mundane purchases at the local shop all the way up to the financial analysis of companies, everything relies on numbers.

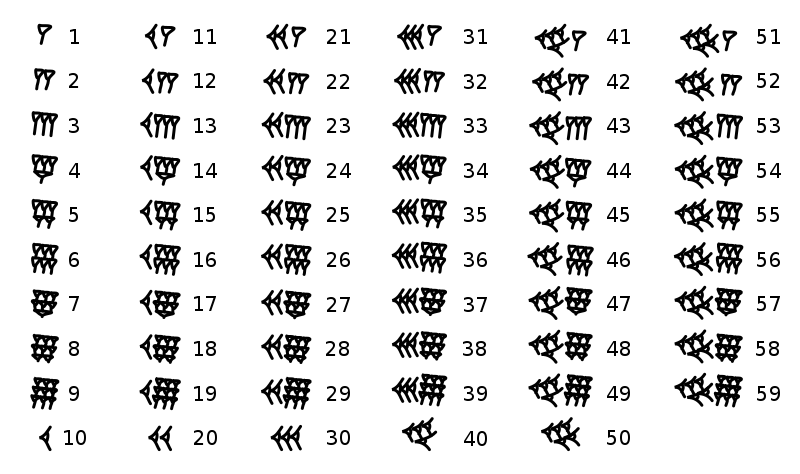

How did we reach this situation? It’s not hard to see: from the dawn of mankind, we have needed systems that allow us to keep track of objects and animals. We first used pictorial signs, then conventional ones and, finally, abstract signs. In early writing systems such as hieroglyphs, ancient peoples represented herds of animals and quantities of grain as pictures; later came the lines and wedge-shaped signs characteristic of cuneiform writing, which were grouped together, and when the groups became too numerous, a single sign was used to represent a number.

The story continues in a well-known way, with number systems based on everyday objects and on the reality at hand, quite literally. Most peoples developed decimal systems, or used a base that is a multiple of five, inspired by counting on their fingers; to this day, we call the symbols 0 through 9 digits, a word that originally meant fingers. According to one explanation, the Mesopotamians chose base 60 for surprisingly complicated reasons connected to astronomical observations. Other researchers have argued that the reason is more subtle: the Babylonians knew that 60 has many useful divisors, so that its half, third, quarter, sixth and tenth (30, 20, 15, 10 and 6) are all whole numbers and easy to compute with. Choosing a number with such rich arithmetic properties as the base of a number system may therefore have been a decision motivated by both mathematics and pragmatism.
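To make the divisibility argument concrete, here is a small Python sketch of our own (an illustration, not a reconstruction of Babylonian methods). It lists the divisors of 10 and 60 and expands simple unit fractions in each base; the helper names `divisors` and `expand` are hypothetical. The underlying fact is that 1/n is a single digit in base b exactly when n divides b, which is why thirds and sixths, awkward in base 10, are single sexagesimal digits.

```python
from fractions import Fraction


def divisors(n: int) -> list[int]:
    """Return all positive divisors of n in increasing order."""
    return [d for d in range(1, n + 1) if n % d == 0]


def expand(frac: Fraction, base: int, max_digits: int = 6) -> tuple[list[int], bool]:
    """Expand a fraction 0 <= frac < 1 in the given base.

    Returns the digits after the radix point (at most max_digits of them)
    and a flag telling whether the expansion terminates within that limit.
    """
    digits: list[int] = []
    remainder = frac
    for _ in range(max_digits):
        if remainder == 0:
            return digits, True
        remainder *= base        # shift one place to the left
        digit = int(remainder)   # the integer part is the next digit
        digits.append(digit)
        remainder -= digit       # keep only the fractional part
    return digits, remainder == 0


print("divisors of 10:", divisors(10))
print("divisors of 60:", divisors(60))
for n in (2, 3, 4, 5, 6, 10):
    for base in (10, 60):
        digits, terminates = expand(Fraction(1, n), base)
        status = "exact" if terminates else "repeating (truncated)"
        print(f"1/{n} in base {base}: digits {digits} {status}")
```

In base 60, every fraction in the list is a single digit (a third is simply 20 sixtieths), whereas in base 10 the thirds and sixths repeat forever.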

But let us perform a thought experiment and push the possibilities to the extreme: can you imagine a world that doesn’t use numbers at all? What would it look like? Would we find replacements for numbers that serve a similar function? In other words, do you see numbers as an inevitable step in the evolution of mankind as we know it, such that a parallel world without numbers is, for all practical and theoretical purposes, impossible? The emergence of numbers was surely motivated by our unavoidable contact with the quantitative. Hence a world devoid of numbers would be a world without any understanding or use of quantities. What else is there? Well, for example, the qualitative or the aesthetic. But is it feasible to live a life with no understanding or use of quantity?

The Romanian mathematician Liviu Ornea, a professor at the University of Bucharest, with whom I had the honor and pleasure of studying, once told me[1] in a casual conversation that in nature there are no numbers except zero and one. In other words, people are built to think in binary terms: either an object exists (1) or it does not (0); or, to give it a philosophical flavor, truth and falsity. In his view (which I am not sure he still holds, since the conversation took place about ten years ago), what one calls “a number of objects” is, in fact, a repetition, a set of copies of the unit. We do not see four apples on a table, but four instances of one apple. Each copy clearly has its own specific properties, but mathematically speaking, that is, in an abstract and essential sense, we are dealing with “four ones”. There are many implications and tangents one could follow here, among them Platonism, the belief in a unique Object whose imperfect copies are what we see around us, but we do not intend to go that way. Let us simply keep in mind that one could argue that natural numbers do not exist in nature, and that all we see are copies of the unit.

An immediate objection could be: fine, suppose we do not have four apples on the table but four copies of one apple; then the question “How many copies?” has the answer “four”, which is greater than one, so we contradict the hypothesis and justify the need for numbers greater than one. The reply has two parts. First, it would be far-fetched to think that a mathematician could hold that there are no natural numbers larger than one; that reading misses the point. The argument is subtler: we restrict the discussion to physical reality. Seeing four apples on the table does not necessarily make the number four exist physically; rather, we choose to express a physical reality mathematically, that is, as the result of an abstraction, through a term that happens to be a number greater than one.

The second part of the reply is a bit more technical, and it uses tools specific to higher mathematics. However, it can be understood by analogy with grammar: numbers “in themselves” are different from numbers used for counting. In other words, there are numbers and numerals, just as in natural language one can say both “four apples” and “the fourth apple”. The first phrase contains the number four, whereas the second contains the numeral “fourth”. Mathematics likewise tells them apart, calling them cardinals and ordinals respectively: the former are numbers “in themselves”, while the latter are used for counting or ordering. Therefore, to say that one sees four apples on the table, one must first count them, and counting uses numerals: the first apple, the second, the third, the fourth. Only after reaching the numeral “fourth” can we speak about the (total) number of apples; in other words, the numeral precedes and implies the number, and the number is a mere consequence.
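For finite collections, the idea that the numeral precedes the number can be mimicked in a few lines of Python. This is a toy sketch of our own, not a formal treatment of ordinals and cardinals (which behave very differently for infinite sets); the list `ORDINAL_WORDS` and the function `count_by_numerals` are hypothetical names chosen for illustration. Note that the function never asks for the size of the collection up front: the cardinal is simply the last ordinal reached.

```python
# Toy model of counting, for finite collections only: each item is named by an
# ordinal ("first", "second", ...), and the cardinal is wherever counting stops.
ORDINAL_WORDS = ["first", "second", "third", "fourth", "fifth",
                 "sixth", "seventh", "eighth", "ninth", "tenth"]  # hypothetical, small counts only


def count_by_numerals(items: list[str]) -> tuple[list[str], int]:
    """Name each item with an ordinal word and return (ordinals, cardinal)."""
    ordinals: list[str] = []
    cardinal = 0
    for position, _item in enumerate(items, start=1):
        if position > len(ORDINAL_WORDS):
            raise ValueError("this toy example only knows the first ten ordinals")
        ordinals.append(ORDINAL_WORDS[position - 1])  # the numeral comes first...
        cardinal = position                            # ...the number is where we stop
    return ordinals, cardinal


table = ["apple"] * 4  # four copies of "one apple"
ordinals, how_many = count_by_numerals(table)
print(ordinals)   # ['first', 'second', 'third', 'fourth']
print(how_many)   # 4

# The same total seen as "four ones": 1 + 1 + 1 + 1
print(sum(1 for _ in table))  # 4
```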

If the discussion so far seems arid, or mere philosophical and semantic babble, let me steer it toward another possibility. Numerous studies, gathered in Stanislas Dehaene’s book The Number Sense, suggest that we may possess a “sense of number”, perhaps innate or at any rate deeply rooted in our brains, which would make understanding the world through numbers seem inevitable, almost preprogrammed. Dehaene and his team first tried to understand the physiological foundations of numerical ability and then looked for its origins.

A series of experiments showed that infants only a few months old react noticeably differently to a scene showing a correct computation, such as 2 + 1 = 3, than to one showing an impossible result, such as 2 + 1 = 2. Moreover, the results held even when the researchers changed the objects (dolls) used to represent the operations. It might follow, then, that we are hardwired, programmed in some sense, to think numerically and, to put it more grandly, to abstract away from objects and obtain numbers. If infants only months old are not distracted by colors and shapes and can extract quantitative information, then a sense of number may indeed be inevitable.

Critics could say that this goes too far. The fact that an infant “understands”, in its own way, that 2 + 1 = 3 or 1 + 1 = 2 does not necessarily mean that numbers are deeply rooted in its brain. That may well be true. At the same time, one cannot ignore the evidence, nor our own experience. Learning as a whole relies both on building upon previous knowledge and on the reductionist decomposition of broad concepts until one reaches elements that are already known, or as close to them as possible. That being the case, one could reply that the experiments Dehaene describes reveal a foundation upon which the whole understanding of numbers can be built, through learning processes that work by accumulation, as mentioned above. There are also cases in which an individual’s upbringing and education counter and inhibit certain instincts or primitive vestiges that our biology retains. In other words, this research does not exclude the possibility that a child grows into an adult who does not know what numbers are. There are even documented cases of neurological disorders in which the brain regions responsible for numbers behave unpredictably; examples include acalculia and even epilepsia arithmetices. Pushing this further, one could imagine a rewiring of our brains such that numbers become no more than signs on paper, without any meaning or use.

What would the world look like then? What would change, and what would stay the same?

What do you think?

[1] Professor Ornea’s idea is only that there are no physical instances of numbers other than zero and one. The rest of the discussion and the development I propose here are my own.